In the post-COVID world, retailers face huge challenges: increased competition from online shopping, and consumers who are more concerned about cost than ever before. Many retailers also have ageing IT infrastructure, have outsourced much of their core services, and are trying to adopt public clouds more than ever before.

This is pushing retailers to look at how they can bring innovation to the Point of Sale, the Stores, and the Data Centers.

The business leaders of a retail company know they need to modernise and improve their IT architecture so that they can try new business models and explore new ideas. They made a lot of technology investments with Red Hat and Azure, but don’t know how to guide the IT department to make the best use of this technology to solve business problems. They’ve brought you in as a potential partner to help them on this journey.

You have found that the business leaders don’t speak to the IT department very often, and they don’t share much detail about what they want - they just want something better than they have today. They’ve left you alone as a potential partner, and set you the challenge to use these recent technology investments with Red Hat and Azure. The business leaders will speak with their IT department at the end of the month to find the Top 3 technical implementations, and invite those potential partners to a business presentation to determine the winner.

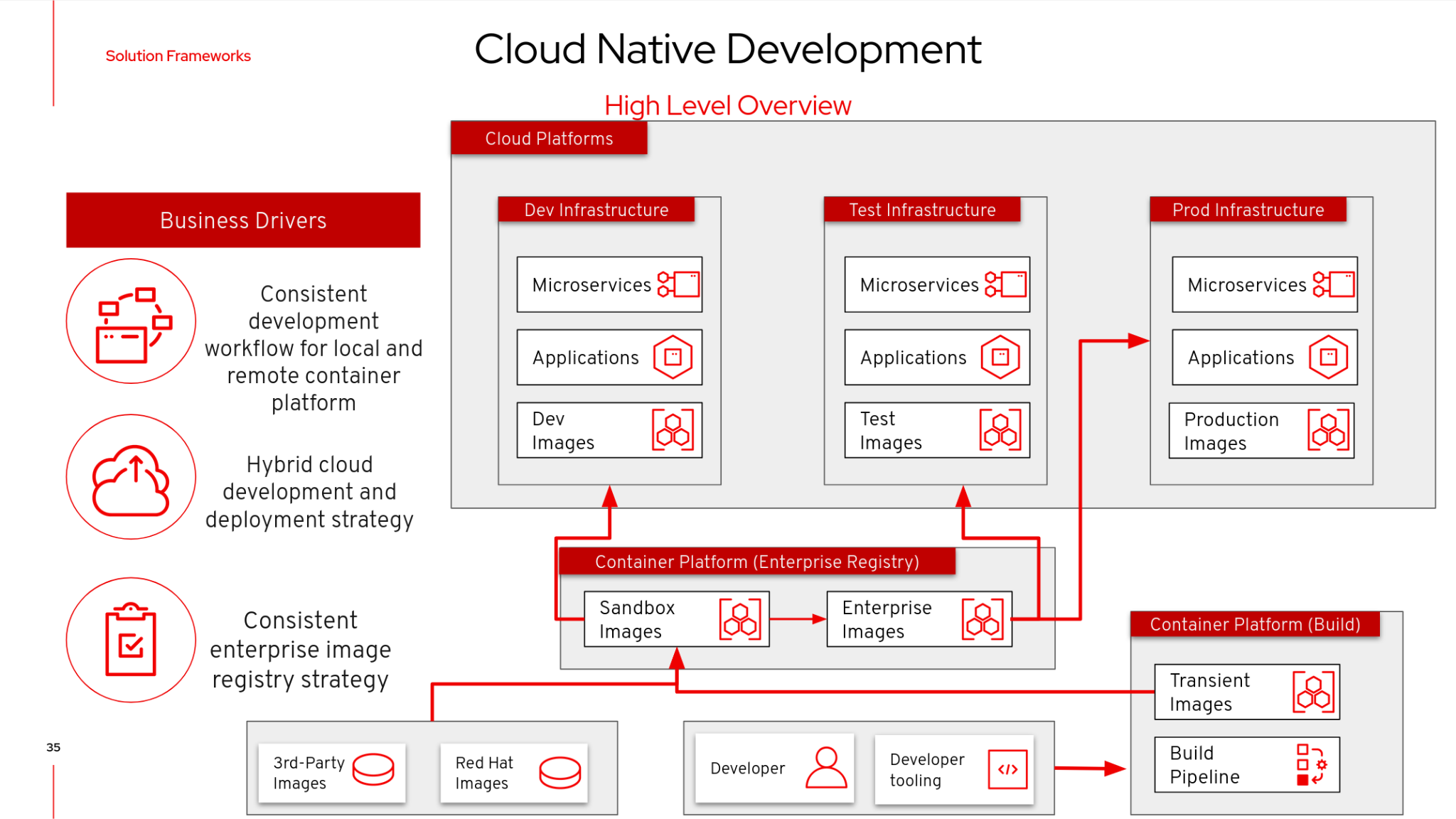

You know the current architecture of the system has many pain points and limitations, and as you explore the architecture over the next few weeks, you’re bound to find more issues. It has been “lifted and shifted” into a container environment, but it isn’t really cloud native yet.

Your main goal is to build upon the template environment that you start with, taking into account the solution frameworks, and enablement you’ve had so far. You have the freedom to improve the microservices, the development process, the cloud adoption or similar.

Your goal is to prove to the customer that you can use technology to solve some of their business challenges and be a trusted partner.

The business leaders don’t understand the technology, let alone know how to write a requirements specification. However, after a discovery call and talking to some industry analysts, you know roughly what the business is struggling with:

Revenue: It takes days of process and tools to publish new versions of existing services.

Risk: The release processes and change control around these services are nonexistent; there isn’t even a test environment! Changes are made live in production, putting the whole environment at serious risk when developers change things.

Revenue: Business leaders really want to serve the e-commerce market, and extend to web sales, but the current architecture can barely keep up with physical stores!

Risk: It’s almost impossible to know how much inventory is being held at any one time, and in which area of the business.

Risk: The current architecture is insecure. Encryption isn’t used in most places: data at rest and data in transit are all cleartext.

Nearly all services cannot be monitored! The teams have no idea how to build management dashboards, with metrics and monitoring.

Developers often use laptops that are locked down and slow. They would much rather develop in the cloud, close to the code.

We’ve made an investment in OpenShift, but are not yet using it to its full potential: only some applications have been containerized, and there are few pipelines, and little monitoring or logging, in use.

You take a deep breath, and keep the business challenges in mind, as you look at this application and architecture described in this document.

You are provided access to 4 environments, the Point of Sale (Fitlet2), the Store (Intel NUC), the Warehouse (Azure Red Hat OpenShift) and the Data Center (Azure Red Hat OpenShift). All these components are connected to each other as part of a system that serves the retailer to make money.

Lastly, you forget for a moment what products you even sell in your store! Thankfully, in this Hackfest, you decide it’s not important, and can choose which virtual products your store sells, while you focus on addressing some of those business challenges.

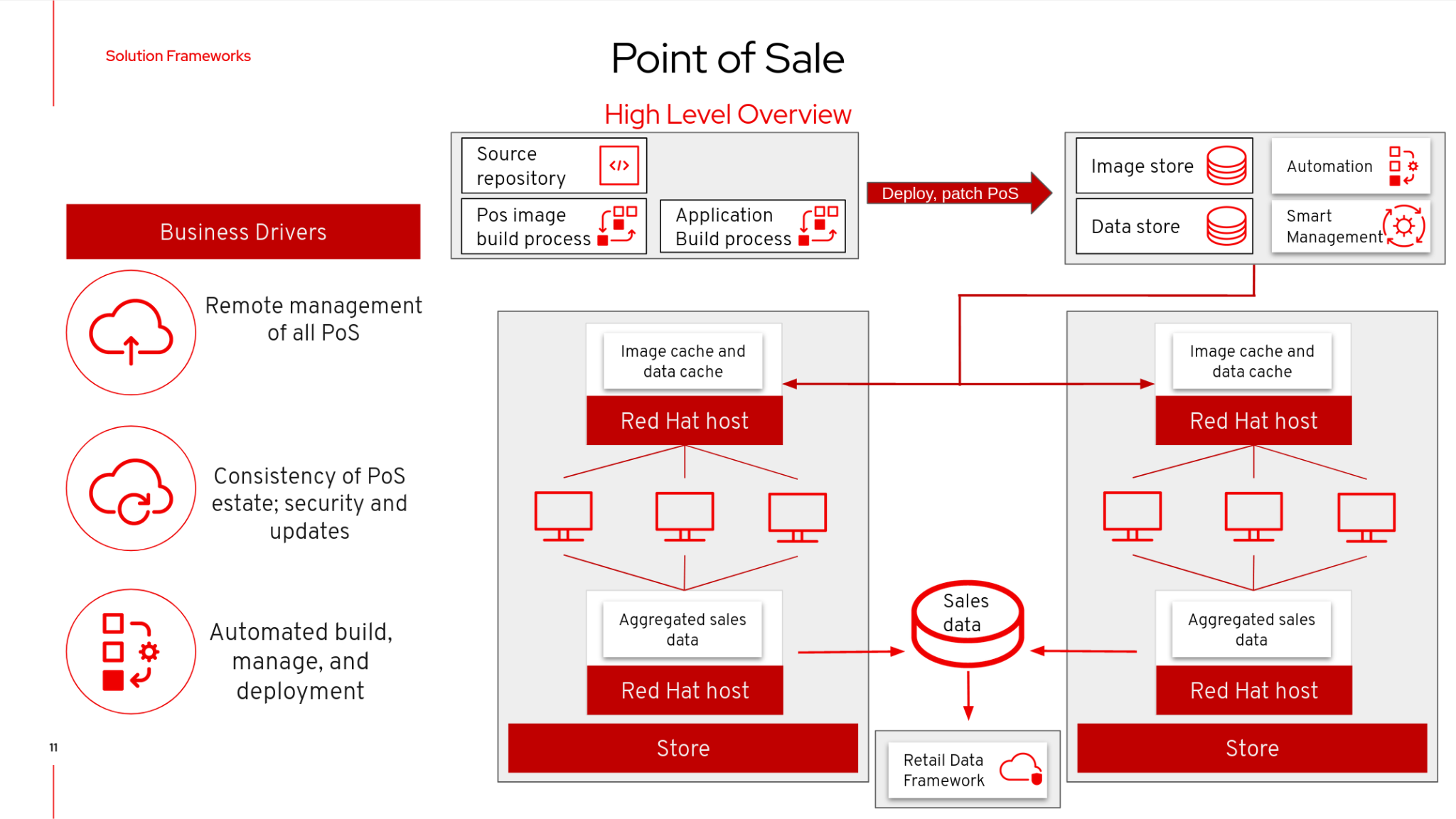

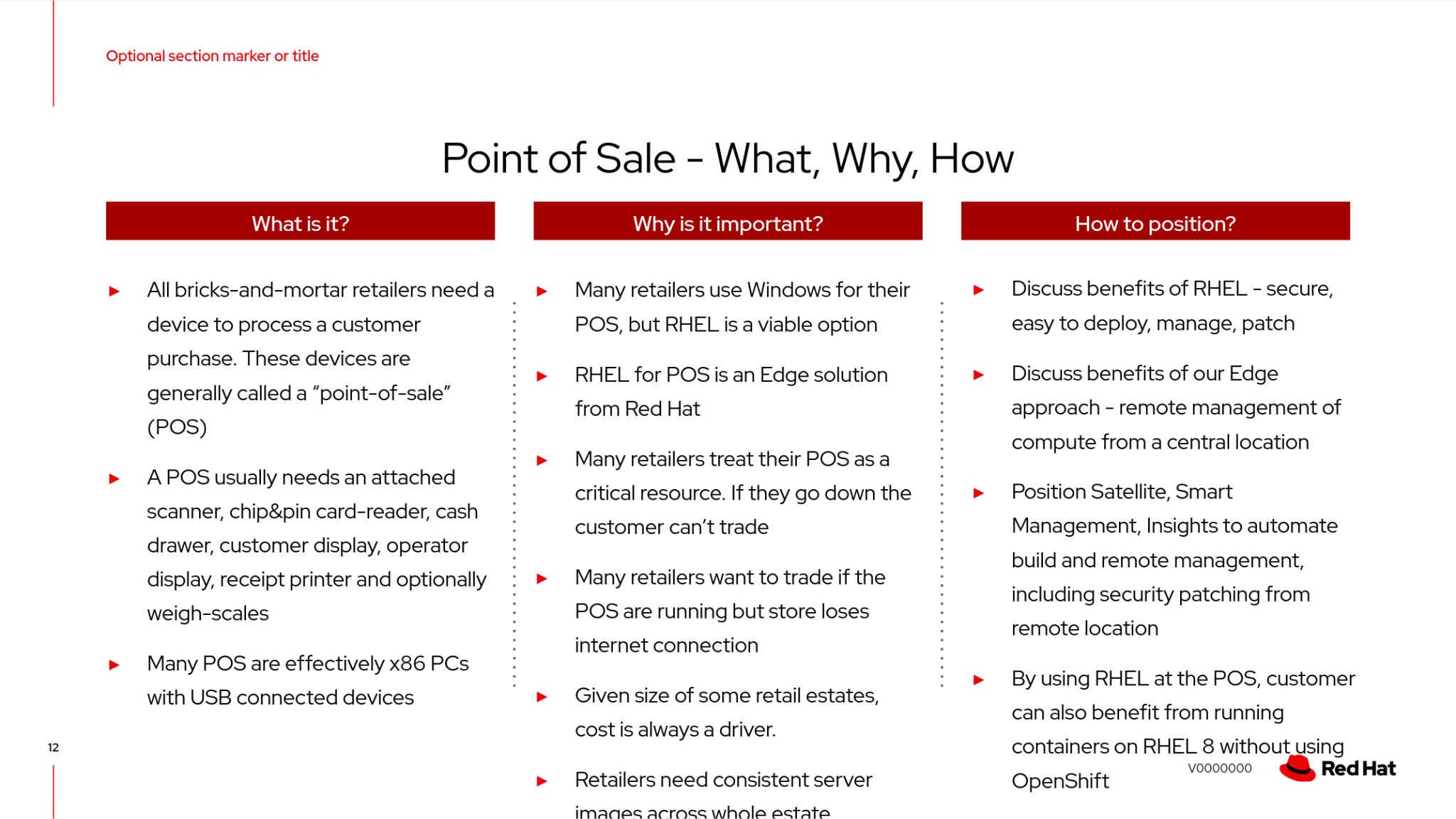

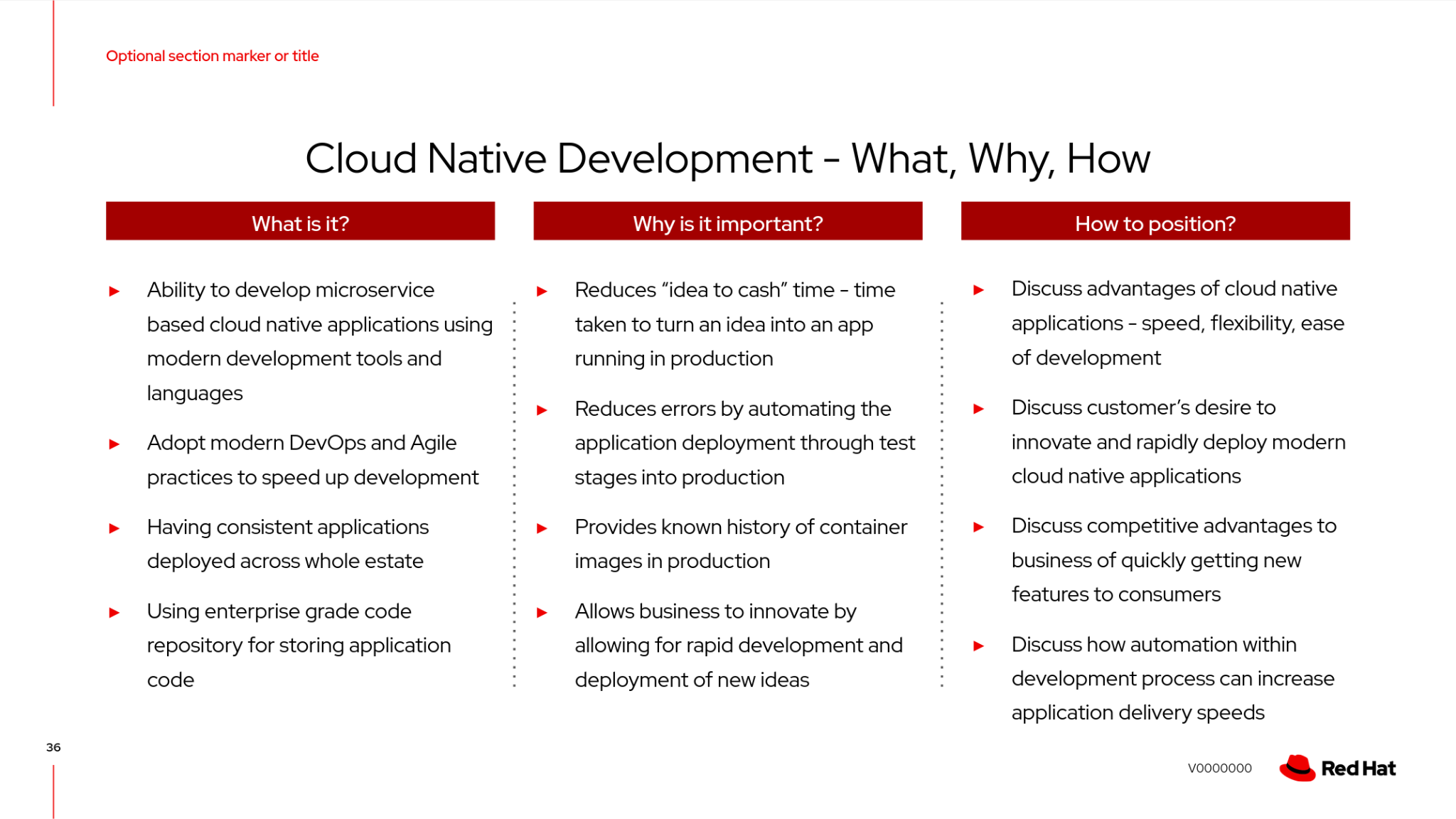

This retail company has been talking with Red Hat’s architects on the topic of validated patterns that could be implemented, on top of 2 key solution frameworks that affect retailers, around the Point of Sale, and Cloud Native development. Your team has been given a high level overview of these solution frameworks, in the hope that your proof of concept developed during the hackfest could contribute to a validated pattern in the future.

The architecture you’ve been provided by the Retailer’s IT department consists of 3 main environments.

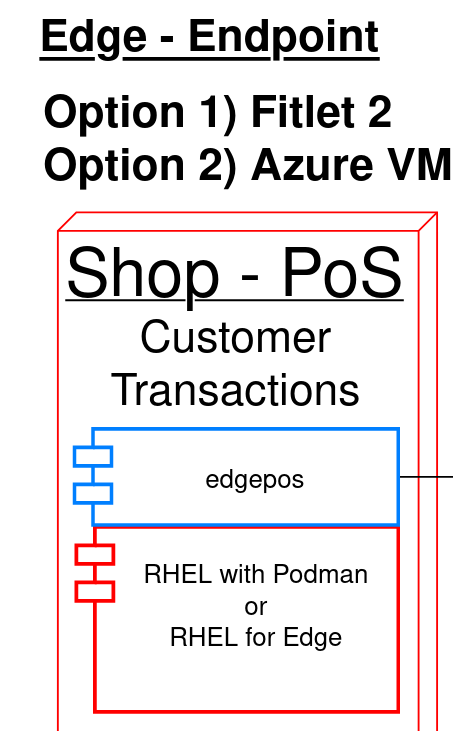

There is the Edge Endpoint device, running a Point of Sale (PoS). There are several of these deployed into each shop building.

There is a Shop “Back Office” which is connected to the PoS edge devices. This is normally a low powered server running in the warehouse in the shop. This part of the architecture is expected to work offline, as the shop’s internet connection goes down quite frequently.

There is also the public cloud datacenter, which provides several services to the shop. When the internet connection to the shop is working, updates are streamed into the datacenter. When the connection goes down, the “Back Office” server will buffer the updates until the connection is restored.
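The buffering behaviour described above can be sketched as a small store-and-forward queue. This is an illustration of the idea only, not the template's implementation; the class name `StoreAndForward` and the `send` callback are hypothetical.

```python
from collections import deque
from typing import Callable, Deque, List


class StoreAndForward:
    """Buffers updates while the uplink is down and flushes them in order."""

    def __init__(self, send: Callable[[dict], None]) -> None:
        self._send = send                      # hypothetical uplink callback
        self._pending: Deque[dict] = deque()
        self.online = True

    def publish(self, update: dict) -> None:
        # Always enqueue first so ordering is preserved across outages.
        self._pending.append(update)
        if self.online:
            self.flush()

    def flush(self) -> None:
        # Deliver buffered updates oldest-first; stop if the link fails again.
        while self._pending:
            try:
                self._send(self._pending[0])
            except ConnectionError:
                self.online = False
                return
            self._pending.popleft()

    def reconnect(self) -> None:
        self.online = True
        self.flush()


delivered: List[dict] = []
buffer = StoreAndForward(delivered.append)
buffer.online = False          # simulate the shop losing its connection
buffer.publish({"sale": 1})
buffer.publish({"sale": 2})
buffer.reconnect()             # connection restored: the backlog is drained
```

An update is only removed from the queue after it has been sent successfully, so a failure mid-flush leaves the remaining backlog intact.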

There are some teams in this Hackfest event which did not receive a package containing the hardware - a Fitlet 2 Edge Device and Intel NUC for the Edge Gateway. This is either due to availability, shipping delays, or problems with shipping to your country.

The judges will not penalise you for this, and virtual alternatives to these environments are available. There are also teams who are working fully remotely, and may prefer to use a virtual environment instead.

You do not have full administrative access to the entire cluster of ARO and Azure, but many of the operators you would want to use to install the architecture, and extend it, have been pre-installed for you in the Datacenter cluster. The Hackfest admins (“Service Desk”) will happily install more operators at your request.

Additionally, access to an empty Azure Resource Group per team is available at request - we would ask that if you feel your solution needs to deploy native Azure services, that you are mindful of the costs, as running out of Azure credits halfway through the Hackfest would be a disaster for everyone! If possible, keep as much of your solution inside OpenShift as possible. As a guideline, a “soft budget” of $500 is available for Azure credits per team.

At Hackfest, we won’t tell you what to do, or what direction to take. This is an opportunity to learn by doing, and use the skills you have as a partner to compete in a competition!

With that being said, there are support teams here for you, to help you get past technical blockers, or get more familiar with the technology. Do ask if you are stuck!

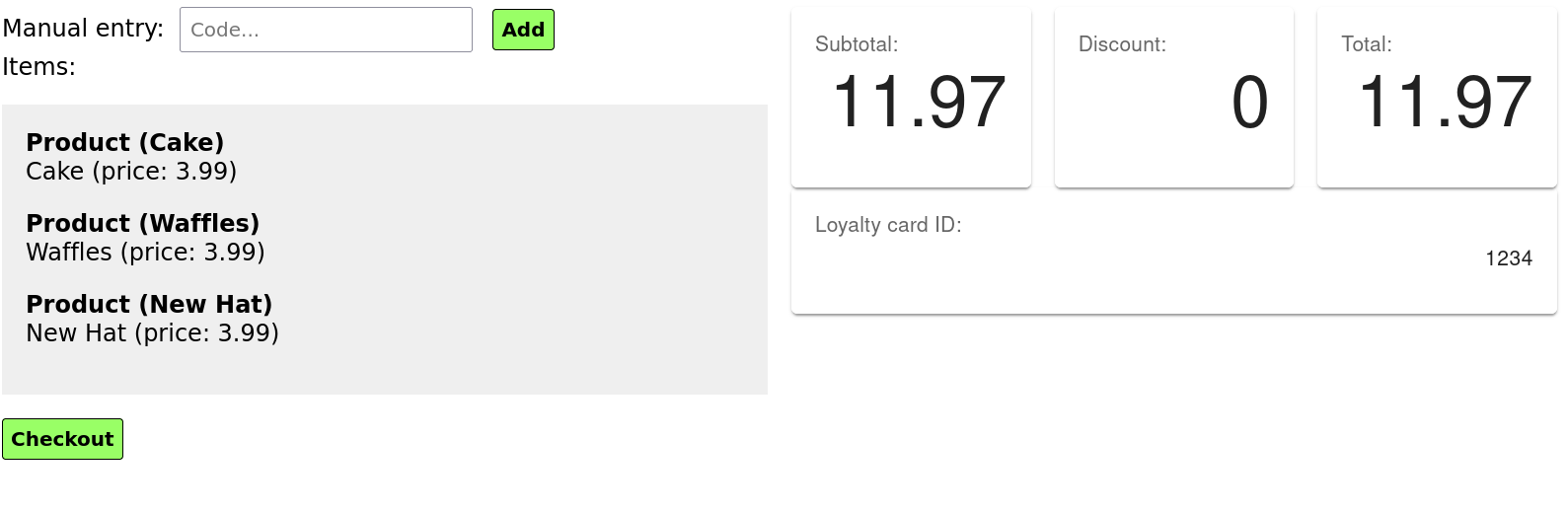

The edge device runs a single application called edgepos. Its purpose is to process basic transactions through its simple web interface, which is operated by Shop employees. The software can handle discounts via a decision table, and records of purchases are sent to the Shop “back office” server, which is a bit more powerful.
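To make the idea of a discount decision table concrete, here is a minimal sketch. The rules, thresholds and function names are invented for illustration and are not taken from the edgepos code; the table is evaluated with a first-hit policy.

```python
from decimal import Decimal
from typing import Callable, List, Tuple

# Each row: (condition on basket total and item count, discount rate).
# The rules themselves are hypothetical examples.
Rule = Tuple[Callable[[Decimal, int], bool], Decimal]

DISCOUNT_TABLE: List[Rule] = [
    (lambda total, items: total >= Decimal("100"), Decimal("0.10")),
    (lambda total, items: items >= 5, Decimal("0.05")),
    (lambda total, items: True, Decimal("0.00")),   # default row
]


def discount_rate(total: Decimal, items: int) -> Decimal:
    """Return the rate of the first matching row (first-hit policy)."""
    for condition, rate in DISCOUNT_TABLE:
        if condition(total, items):
            return rate
    return Decimal("0.00")


def amount_due(total: Decimal, items: int) -> Decimal:
    """Apply the selected discount and round to two decimal places."""
    rate = discount_rate(total, items)
    return (total * (Decimal("1") - rate)).quantize(Decimal("0.01"))
```

Keeping the rules in a table rather than in nested conditionals means a new promotion is a new row, not a code change in the checkout logic.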

This hardware running on the edge device is relatively low powered, and there is one at each shop till. It has ethernet, and a few USB devices attached to it, and most shops use old monitors that barely support HDMI 1.0!

The edgepos software was recently upgraded from J2SE running on Windows XP to Quarkus running in a container on Linux, which should be a huge improvement in time.

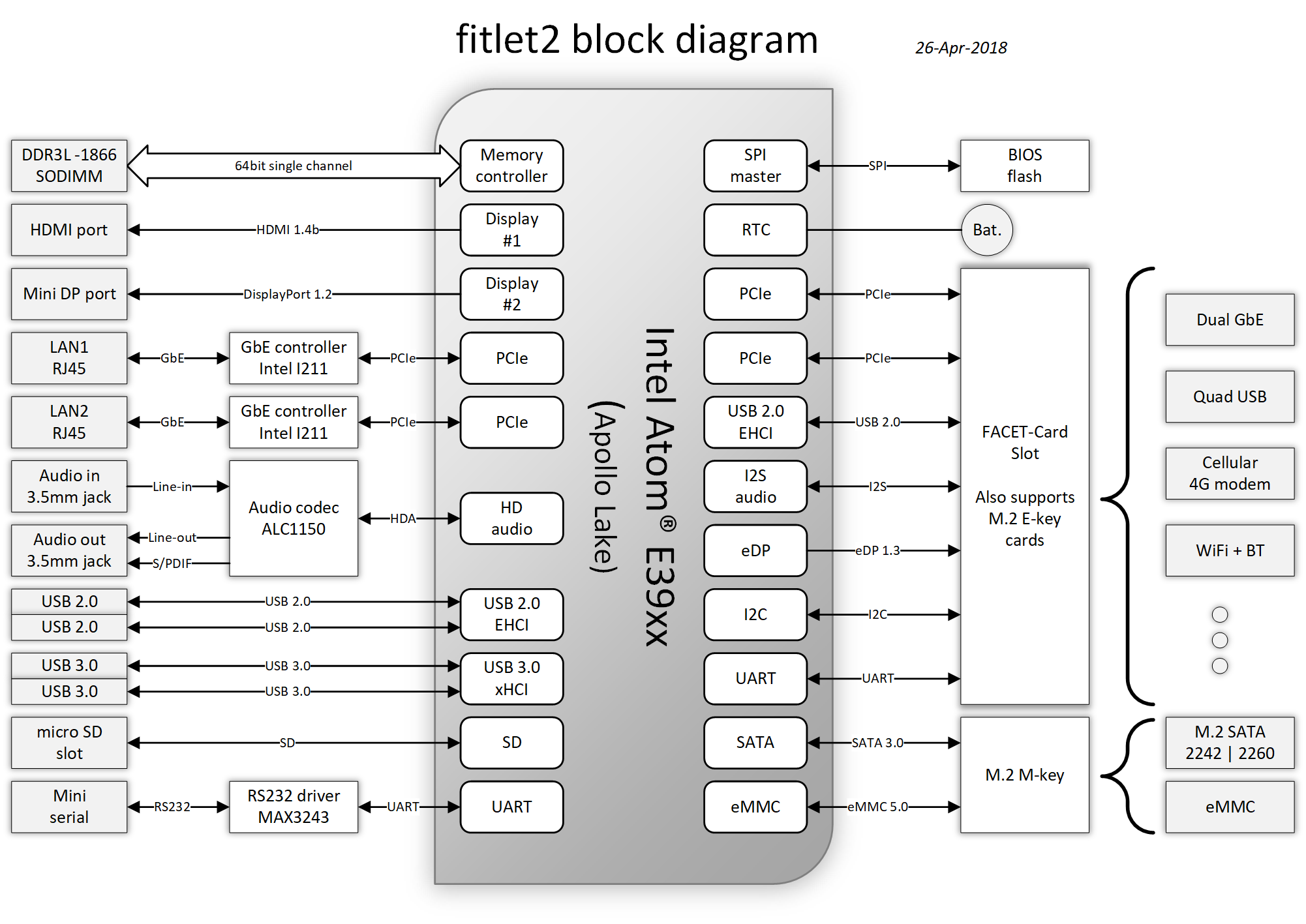

The Hardware of choice for the edge device is the Compulab Fitlet2.

The FITLET2-CE3930 is equipped with an Intel® Atom x5-E3930 processor.

| Specification | Value |
|---|---|
| Model | FITLET2-CE3930-P36 |
| Fan / Fanless | Fanless |
| CPU | Intel® Atom x5-E3930 |
| RAM | 1x 2 GB SO-DIMM 204-pin DDR3L Non-ECC DDR3L-1866 (1.35V) |
| Display | Dual head: mini DP 1.2 4K @ 60 Hz; HDMI 1.4 4K @ 30 Hz |
| WIFI | 802.11ac dual antenna + BT 4.2 |
| Ethernet | 2 GbE ports on-board |
| USB | 4 USB ports on-board: 2x USB 3.0 + 2x USB 2.0 |
| Audio | Stereo line out Realtek ALC1150 audio codec |
| Serial Port | RS232 mini-serial |
| BIOS | AMI Aptio V |
| Input voltage range | Up to DC 9V – 36V* |
| Operating system support | all |

Additional technical detail can be found at the following link.

The virtual alternative to the Fitlet 2 is a RHEL hypervisor virtual machine running in Microsoft Azure. This machine is pre-installed with QEMU, and has 4 vCPUs and 16 GB of RAM (somewhat more powerful than the Fitlet 2). This hypervisor machine can be accessed with SSH, and you can use the libvirt / qemu tooling to create virtual machines to simulate a Fitlet 2 / PoS.

This may also be a far more viable option if your team gets heavily involved with using FDO to roll out new operating system images, as it will be faster to test than flashing an SD card.

Please reach out to the “Service Desk” (Hackfest admins) if you’d like one of these machines provisioned for you.

Reminder: Not using RHEL for Edge will lose you many points when it comes to judging time! Standard RHEL with Podman is only useful for getting started quickly at the beginning of Hackfest.

In an edge computing solution, the operating system is required to be efficient, lightweight and mature. The team focused on the most efficient enterprise-grade solution on the market, one which guarantees security, performance, and container-native solutions. Below is a list of the principal, compulsory features we’ve been looking for in an operating system:

- Must be a fully-fledged 64-bit OS (not just its kernel);
- Must have a very small memory footprint;
- Must be immutable or, at least, modular;
- Must have the ability to run a container engine with the minimum memory footprint, like Podman or CRI-O.

The most suitable operating system, which is also certified on the target hardware, is Red Hat Enterprise Linux for Edge (RHEL for Edge).

Podman is a daemonless, open source, Linux-native tool designed to develop, manage, and run Open Container Initiative (OCI) containers and pods.

It has a similar directory structure to Buildah, Skopeo, and CRI-O.

Podman doesn’t require an active container engine for its commands to work.

Last but not least, Podman is available in the standard RPM repositories of RHEL, so it is fully supported.

The edgepos service is implemented on top of the Quarkus framework. It can run in several different ways, but on RHEL for Edge, it would run in native mode inside a container.

Moreover, the edgepos service is designed and implemented to have the smallest possible memory footprint and to perform transactions against the API exposed by the shop “back office” in the shortest time. Native mode dramatically improves performance while keeping full compatibility with the OCI standards and the Podman engine.

The edgepos service is responsible for the emulation of a shop “till”, that scans barcodes, and eventually “checks out” those products. Any barcode is valid and will generate a valid product, with a fixed price that is hard coded into the UI - which is very strange for a retail store…

The UI is a simple React application, qiot-project/qiot-retail-edgepos-ui.

This project can be built with npm run build, and the changed files should be copied into the qiot-retail-edgepos service, under the /src/main/resources/META-INF/resources directory.

The edgepos service can be found in the qiot-project/qiot-retail-edgepos project.

When scanning barcodes, or doing a checkout, the UI will make a REST API call to /bill, which will emit Kafka messages to a configured broker.

The Edge Server is based on the powerful Intel® NUC 10 Performance kit - NUC10i7FNH.

The Intel® NUC guarantees performance and stability to the container platform designed to control any IT systems that operate in the store, most importantly the point of sale machines.

| Specification | Value |
|---|---|
| Product Collection | Intel® NUC Kit with 10th Generation Intel® Core™ Processors |
| Board Number | NUC10i7FNB |
| Board Form Factor | UCFF (4" x 4") |
| Socket | Soldered-down BGA |
| # of Cores | 6 |
| # of Threads | 12 |
| Processor Base Frequency | 1.10 GHz |
| Max Turbo Frequency | 4.70 GHz |
| RAM | DDR4-2666 1.2V SO-DIMM |
| Internal Drive Form Factor | M.2 and 2.5" Drive |
| SSD | M.2 256 GB |
| Lithography | 14 nm |
| TDP | 25 W |
| DC Input Voltage Supported | 19 VDC |

Additional technical detail can be found at the following link.

The team has provided a detailed walkthrough about How to install SNO on 10th gen NUC here.

As the Intel NUC is designed to run OpenShift in this simulation, an alternative environment is an Azure Red Hat OpenShift environment. This is a fully capable OpenShift cluster, but will have minimal operators installed - compared to the DataCenter cluster, which has many operators installed.

This may also be a far more viable option if you are limited by the speed or availability of the Intel NUC hardware.

Red Hat has worked to make the OpenShift footprint smaller, so that it fits into more constrained environments, by putting both control and worker capabilities into a single node. If you are using the shared Shop cluster instead, then your environment is larger than a single node, but its functionality is the same.

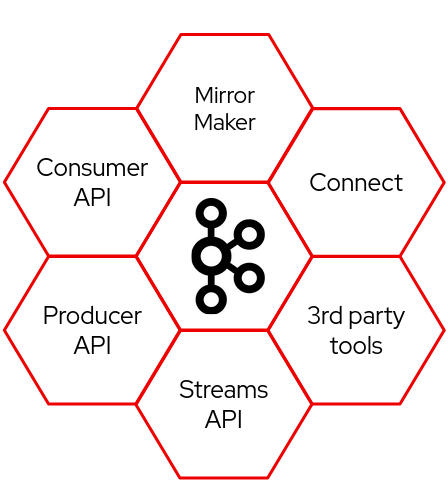

An enterprise distribution of Apache Kafka, Red Hat AMQ Streams is a data streaming platform. It can be installed as a simple service on RHEL, or easily using the Integration operator on OpenShift.

Services can connect to the provider to publish, or receive messages over a channel, making it easy to build event-driven architectures, with loose coupling between services.

Kafka instances can be connected in several ways, but this template architecture uses MirrorMaker2 to connect a Kafka instance running on the Shop Back Office OpenShift to a Kafka instance running in the Datacenter. This allows messages about purchases to reach the datacenter, even if the connection goes down temporarily.

Kafka has a vibrant ecosystem of supporting services, including Connect (Debezium), and MirrorMaker2.

Cert-manager automates certificate management in cloud native environments, which helps with implementing dynamic certificate provisioning for edge devices.

Cert-manager builds on top of Kubernetes Custom Resource Definitions (CRDs), introducing certificate authorities and certificates as first-class resource types in the Kubernetes API.

This makes it possible to provide 'certificates as a service' to developers working within your Kubernetes cluster.

Highlights:

- Provides easy to use tools to manage certificates.
- A standardised API for interacting with multiple certificate authorities (CAs).
- Gives security teams the confidence to allow developers to self-serve certificates.
- Support for ACME (Let’s Encrypt), HashiCorp Vault, Venafi, self signed and internal certificate authorities.
- Extensible to support custom, internal or otherwise unsupported CAs.


PostgreSQL is a powerful, open source object-relational database system with over 30 years of active development that has earned it a strong reputation for reliability, feature robustness, and performance.

It is recommended to use the CrunchyDB Operator to install Postgres, which can be configured to expose the transaction logs to services like Debezium (Kafka Connect).

The edgepos-manager service is implemented on top of the Quarkus framework.

Several instances of the edgepos service can send messages about purchases back to a single edgepos-manager service.

The data model used for communication between edgepos and edgepos-manager is in the qiot-project/qiot-retail-edgepos-model repository.

More details can be found in the qiot-project/qiot-retail-edgepos-manger repository.

This service is a suggested concept in the architecture, and is NOT implemented (i.e., there is no code).

This is because at the moment there is no concept of registration, or security, between the Point of Sale and the edgepos-manager service.

One potential solution to this is mutual TLS, handled by a registration service. However, the implementation is left entirely up to you to decide.
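As one illustration of the mutual TLS idea, the sketch below builds a server-side TLS context that rejects any client that does not present a certificate signed by a trusted CA. It is a minimal sketch under assumptions: the function name `server_context` and its file-path parameters are hypothetical, and how certificates are issued to each Point of Sale (the registration part) is deliberately left out.

```python
import ssl
from typing import Optional


def server_context(ca_file: Optional[str] = None,
                   cert_file: Optional[str] = None,
                   key_file: Optional[str] = None) -> ssl.SSLContext:
    """Build a server TLS context that requires a valid client certificate."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # Requiring a client certificate is what makes the TLS "mutual".
    ctx.verify_mode = ssl.CERT_REQUIRED
    if ca_file:
        # Hypothetical path: the CA that signed the Point of Sale certificates.
        ctx.load_verify_locations(cafile=ca_file)
    if cert_file and key_file:
        # Hypothetical paths: the server's own certificate and private key.
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return ctx
```

With such a context, a till without a certificate from the trusted CA fails the TLS handshake before any application code runs.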

The Datacenter area of the architecture is hosted entirely by an Azure Red Hat OpenShift cluster. This cluster contains two default namespaces, team-production and team-development, but you may create additional namespaces as needed.

This cluster has a large number of useful operators pre-installed:

OpenShift DevSpaces operator - providing a “development environment in the cloud” based on Visual Studio Code / Eclipse Che. This allows for rapid prototyping of new services inside OpenShift.

Red Hat Integration operator, providing AMQ Streams (Kafka), Connect (Debezium), and MirrorMaker2, as well as several other useful resources.

OpenShift Service Mesh Operator, although the services that you have today don’t really make use of Service Mesh, which is a real lost opportunity.

Web Terminal operator - making it easy to get access to the oc command, and other useful OpenShift command line tools.

cert-manager - as described previously.

CrunchyDB - as described previously, to install PostgreSQL.

Red Hat OpenShift is the hybrid cloud platform of open possibility: powerful, so you can build anything and flexible, so it works anywhere.

Adopting the OpenShift Container Platform saved us many hours of implementing features and behaviors that would otherwise have had to be home-grown:

- Native pipelines using Tekton
- One-shot installation using Helm charts
- Day-2 operations using Operator Framework
- Container storage
- Security and isolation
- Automated cluster scalability

More about OpenShift Container Platform can be found here.

Because the system is expected to receive and handle a large number of concurrent messages, AMQ Streams is the component of choice for streaming messages through the integration and storage layers.

An internal streaming service guarantees scalability and reliability of the message flow management within the Datacenter business logic.

This design makes it a lot easier to decouple the implementation details of the integration services responsible for offloading (consuming messages from) every topic and storing the values into the storage tier, improving horizontal scalability.

More about AMQ Streams can be found here.

Debezium is an important part of the Red Hat Integration and Kafka ecosystem. It is not tied to Quarkus or Java projects: it connects to your database transaction logs and watches for changes. It can react to inserts, updates and deletes made by any application (as it listens to the database itself) and emit a Kafka event for each one.

In modern cloud native architectures, Debezium allows you to build loosely coupled microservices and event driven architectures with ease, creating powerful new events simply by editing configuration.
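To show what consuming such events might look like, the sketch below summarises a Debezium-style change event. The `op`, `before` and `after` fields follow the Debezium event envelope; the `id` and `price` columns and the function name are assumptions made for illustration, not the schema of the template database.

```python
import json
from typing import Optional


def describe_change(raw: str) -> Optional[str]:
    """Summarise a Debezium-style change event for a product table."""
    event = json.loads(raw)
    payload = event.get("payload", event)   # the envelope may or may not wrap a payload
    op = payload.get("op")
    before, after = payload.get("before"), payload.get("after")
    if op in ("c", "r"):                    # create, or snapshot read
        return f"product {after['id']} added at {after['price']}"
    if op == "u":
        # "before" can be absent, depending on how the database is configured.
        old = before["price"] if before else "unknown"
        return f"product {after['id']} price {old} -> {after['price']}"
    if op == "d":
        return f"product {before['id']} removed"
    return None                             # unknown operation: ignore


sample = json.dumps({"payload": {
    "op": "u",
    "before": {"id": 7, "price": "1.99"},
    "after": {"id": 7, "price": "1.49"},
}})
print(describe_change(sample))
```

A consumer like this never talks to the service that wrote the row, which is the loose coupling the paragraph above refers to.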

PostgreSQL hardly needs any introduction. It’s one of the most popular open source databases, well known and trusted for its scalability and performance in the enterprise space.

Red Hat recommends using the CrunchyDB operator to easily install PostgreSQL on OpenShift, in particular because the operator includes support for exposing transaction logs to Debezium as well. Note that not all PostgreSQL container images support exposing the transaction logs, so do use the operator!

Prometheus is the de facto time series metrics database, used pervasively in cloud native architectures, and Kubernetes is no exception. While OpenShift includes Prometheus as part of the cluster infrastructure, how to use that Prometheus instance with your applications is commonly misunderstood.

The short answer is that you don’t! Leave OpenShift to manage its Prometheus, but use the operator to deploy a Prometheus instance just for your application(s). This separates cluster logic and business logic.

You will see at least one Microservice has elementary Prometheus support - consider studying this code and adding Prometheus to all of your services, giving a standard, well supported API to monitor and pull in metrics.
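For orientation, this is roughly what a service exposes for Prometheus to scrape. The sketch renders a single counter in the Prometheus text exposition format; the metric name `edgepos_checkouts_total` and the `shop` label are hypothetical, and a real service would normally rely on a metrics library rather than hand-written formatting.

```python
from collections import Counter

# Hypothetical metric: number of completed checkouts, labelled by shop.
_checkouts: Counter = Counter()


def record_checkout(shop: str) -> None:
    """Increment the checkout counter for one shop."""
    _checkouts[shop] += 1


def render_metrics() -> str:
    """Render the counter in the Prometheus text exposition format."""
    lines = [
        "# HELP edgepos_checkouts_total Completed checkouts.",
        "# TYPE edgepos_checkouts_total counter",
    ]
    for shop, value in sorted(_checkouts.items()):
        lines.append(f'edgepos_checkouts_total{{shop="{shop}"}} {value}')
    return "\n".join(lines) + "\n"
```

Serving the result of `render_metrics()` on an HTTP endpoint is enough for a Prometheus instance to start collecting the counter.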

Grafana also should not need much of an introduction, as its powerful dashboards are built into OpenShift as well. Much like Prometheus, we find customers frequently trying to use the cluster Grafana instance for their applications. Using Grafana is the right idea, but instead, use the operator to deploy a Grafana instance just for your apps, in your namespace. This allows you to build powerful dashboards, charts and graphs to showcase the performance and availability of components of your architecture.

OpenShift recently upgraded DevSpaces to include Visual Studio Code Web - which provides for a hosted, secure, private, container-based IDE in the cloud. Using DevSpaces will not necessarily give you any points at Hackfest, but hopefully you find it useful!

Cert-manager automates certificate management in cloud native environments, which helps with implementing dynamic certificate provisioning for edge devices.

cert-manager builds on top of Kubernetes, introducing certificate authorities and certificates as first-class resource types in the Kubernetes API.

This makes it possible to provide 'certificates as a service' to developers working within your Kubernetes cluster.

Highlights:

- Provides easy to use tools to manage certificates.
- A standardised API for interacting with multiple certificate authorities (CAs).
- Gives security teams the confidence to allow developers to self-serve certificates.
- Support for ACME (Let’s Encrypt), HashiCorp Vault, Venafi, self signed and internal certificate authorities.
- Extensible to support custom, internal or otherwise unsupported CAs.

More about Cert-Manager can be found at cert-manager.io.

1. Enable the Kubernetes Auth Engine in Vault.
2. Authorize the Vault SA in k8s for Token Review.
3. Enable the PKI engine.
4. Configure the PKI role name in Vault.
5. Configure the PKI policy in Vault.
6. Authorize (bind) the issuer SA to use the PKI role through the policy.
7. Create the Vault Issuer in the app namespace with the issuer SA.
8. Cert Manager validates the credentials of the issuer against Vault.

This service is a suggested concept in the architecture, and is NOT implemented (i.e., there is no code).

The edgepos service emits events when the cart is updated, and at checkout time that event contains isCheckout. One suggested approach to implementing a central billing and reporting system is to listen for these events in the edgepos-manager service, and then emit a new event back onto the streaming service, so that a sales-manager service can pick up checkouts, totals, and a list of items, for example.
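A sales-manager service along these lines could start from something like the sketch below, which turns a stream of cart events into one sales record per checkout. Only `isCheckout` comes from the description above; the `posId`, `items`, `price` and `quantity` fields are assumptions about the event shape and should be checked against the qiot-retail-edgepos-model repository.

```python
from decimal import Decimal
from typing import Iterable, List


def summarise_checkouts(events: Iterable[dict]) -> List[dict]:
    """Turn cart events into one sales record per completed checkout."""
    sales = []
    for event in events:
        if not event.get("isCheckout"):      # plain cart updates are ignored
            continue
        items = event.get("items", [])       # hypothetical field name
        total = sum(
            (Decimal(item["price"]) * item["quantity"] for item in items),
            Decimal("0"),
        )
        sales.append({
            "pos": event.get("posId"),       # hypothetical field name
            "items": len(items),
            "total": total,
        })
    return sales
```

Prices are handled as `Decimal` rather than floats so that totals do not pick up rounding errors.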

This service is a suggested concept in the architecture, and is NOT implemented (i.e., there is no code).

The shop-manager service could listen for new edgepos services being provisioned, and update a central list, or database of shops, and points of sale. The shop manager could also be used to classify different shops, enable features only in certain locations, or at certain times.

The inventory-manager service is implemented on top of the Quarkus framework.

The inventory manager service is a crude create/list/delete web UI, built on top of a single PostgreSQL table. This simple implementation allows you to extend it in various different ways.

The design intent of the architecture is to use Debezium to do change-data-capture on the database, and emit events back to the streaming API as product prices get updated, or new products are added.

The inventory-manager service can be found in the qiot-project/qiot-retail-inventory-manager project.

This service is a suggested concept in the architecture, and is NOT implemented (i.e., there is no code).

The distribution-manager service could help shops and warehouses control how much product they have in a specific location, making it easier to identify stock that is running out, or stock that needs a special offer to be sold quickly.
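One simple way to approach this is to compare stock on hand with recent sales and flag both extremes. The sketch below is an assumption-laden illustration: the function name, the "weeks of cover" measure and the thresholds are all invented, and nothing like it exists in the template.

```python
from typing import Dict, List


def stock_alerts(stock: Dict[str, int], weekly_sales: Dict[str, int],
                 min_weeks_cover: float = 1.0,
                 max_weeks_cover: float = 8.0) -> Dict[str, List[str]]:
    """Classify products by how many weeks of sales the current stock covers."""
    alerts: Dict[str, List[str]] = {"reorder": [], "promote": []}
    for product, on_hand in sorted(stock.items()):
        sold = weekly_sales.get(product, 0)
        if sold == 0:
            if on_hand > 0:
                alerts["promote"].append(product)   # stock that is not selling at all
            continue
        weeks_cover = on_hand / sold
        if weeks_cover < min_weeks_cover:
            alerts["reorder"].append(product)       # running out
        elif weeks_cover > max_weeks_cover:
            alerts["promote"].append(product)       # candidate for a special offer
    return alerts
```

Running this per location, rather than across the whole business, would also help with the question of where inventory is being held.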